There’s a story in this morning’s Philadelphia Inquirer about the local government’s failure to collect delinquent real estate taxes:

“Between 2008 and 2011 – the last year for which complete data are available – Philadelphia’s one-year property-tax collection rate has averaged just 85.5 percent. That average is lower than that of any other big city in the nation, including Detroit, and a full 10 percentage points below the average collection rate of the 20 biggest cities in the same period. Some cities, including Boston, Baltimore, and San Jose, Calif., routinely collect 99 percent to 100 percent.”

The story goes on to report that although the administration of Mayor Michael Nutter insists it has improved collections over that period, collections remain below its own announced goals. Lagging property tax collections especially hurt the cash-strapped city public schools, which rely on these funds as a major source of income.

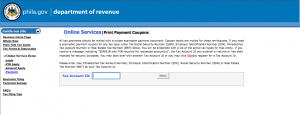

I have to wonder whether part of the problem is the city’s own outdated and confusing website. I don’t own property in Philadelphia, but I know many people who do, and I also know people who live in the city, work outside of it, and therefore have to make quarterly estimated wage tax payments. Millions of Americans have become accustomed to paying taxes online, yet the city of Philadelphia’s tax revenue website has basic usability problems. For one, unless you have a delinquent tax bill, the online payment link directs you to print out a payment coupon and mail in a paper check.

It is possible to pay delinquent taxes online, and it is also possible to pay some taxes by credit card. And there has been some improvement – I was able to access the payment section of the website in Safari today. Some months ago, I was told by a city employee that one could only view it in Internet Explorer.

But it takes a fair amount of time to sort through the site documentation to figure out what can be done where, and I have to wonder how much money and time are being lost as people try to comply with an unwieldy online system.

I would love to see follow-up stories exploring the challenge of modernizing and streamlining online revenue collection systems in Philadelphia and other large cities.